Building a Grammarly-Style App with Flutter and Gemini

We live in an era where artificial intelligence is experiencing major advances. As developers, these advancements open up a wide range of creative possibilities for us.

So, in this article, we're going to walk you through the overall strategy implemented to create a writing assistant that harnesses the power of Gemini's AI, using Flutter and Dart.

Even this introduction has been improved by the app we are going to explain. 😍

Watch this video for a quick overview of the app.

Prerequisites

You don't need to be proficient in Flutter to read this article, which focuses on the strategy used to build a complete application with Gemini. However, to understand the source code, you need basic knowledge of Flutter and Dart, as well as the Gemini API. I wrote an article on the subject.

Project presentation

It's a writing assistant like Grammarly, built with Flutter and Dart and powered by artificial intelligence via Gemini's API.

Whether you're a native speaker of a language or not, it can sometimes be difficult to produce correct text. Whether you write slowly or quickly, you're likely to omit some words or misspell others. That's where AI Writing Assistant comes in.

Here is what the application can do:

Correct grammar, spelling, and various other types of errors.

Support text correction in multiple languages, depending on Gemini's capabilities.

Highlight the words that have been modified, with explanations to help the user improve.

Provide correction explanations in 5 languages (English, French, Swahili, Lingala, and Hindi) to enhance understanding for individuals unfamiliar with the original language.

Suggest a better text while keeping the complete meaning of the original text.

Copy the text.

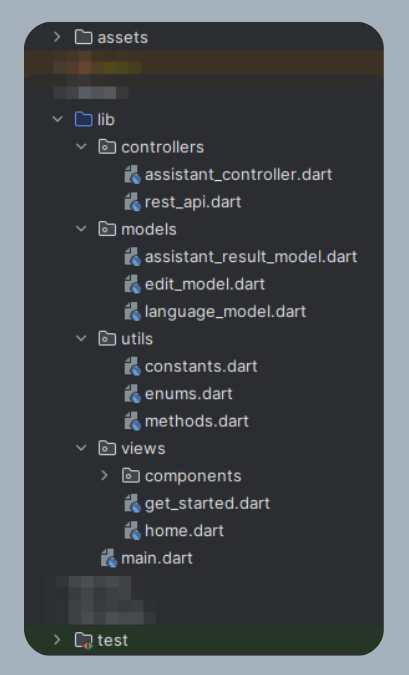

Code Architecture

Since the purpose of the application is to show the power of the API, I adopted a basic MVC code architecture.

models

Contains the classes that represent the data used in the application.

AssistantResultModel: represents the result returned by Gemini. The controller sends it to the UI after processing.

EditModel: represents a changed word or group of words. It contains the old value, the new value, and the reasons for the change in the different languages mentioned above.

LanguageModel: This class represents a language and is used in the UI to allow the user to choose the language in which explanations are displayed.

views

Everything that represents the UI lives here. At the root, each file represents a screen, and the components folder contains the individual UI components to keep the code modular.

controllers

This folder includes the controller that retrieves the prompt from the UI, calls Gemini, and returns the result as a model class.

You'll notice an additional file, rest_api.dart, which contains the method that accesses Gemini's API through direct HTTP requests (the equivalent of the cURL call shown later in this article), because a feature used with Gemini 1.5 is not yet available in the Flutter SDK.

utils

Apart from models, views, and controllers, I've created this folder to gather various useful elements, such as constants, enumerations, and methods that are not specific to a particular model or controller.

User Interface

The app has two screens: an onboarding screen and a second screen where the entire process takes place.

The second page has four main parts:

The language selection area, where the user chooses the language of the explanations.

The text editing area.

The tooltip, which shows the change made to a word or group of words along with its explanation.

The suggestion area where the suggestion for better text will be displayed if available.

For more information, check the Flutter source code for the UI only.

The text editing area

It's important to take a little more interest in the text editing area to explain the choices I've made.

We need a text editor where the user writes their text, and a result display area in which the edited words appear as links that, once clicked, display a tooltip with the details of the change.

Developing all of this from scratch would have been complex and time-consuming. Fortunately, there is a rich text editor called Quill.

It can be configured as a read-only editor for displaying results, and it lets us customize text styles, such as links.

It can also format text from JSON. Here is an example of JSON that we can load into it:

    [
      {"insert":"I have "},
      {"insert":"eaten","attributes":{"link":"0"}},
      {"insert":" water and drunk "},
      {"insert":"many","attributes":{"link":"1"}},
      {"insert":" apples, I am "},
      {"insert":"not","attributes":{"link":"2"}},
      {"insert":" "},{"insert":"hungry","attributes":{"link":"3"}},
      {"insert":" today but I will be tomorrow."}
    ]

Instead of URLs, the link attributes hold numbers representing the index of the changed word, which makes it easier to display the change information associated with the clicked word.
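To make the index-as-link idea concrete, here is a minimal sketch in plain Dart (no Flutter or flutter_quill dependency) of how the value of a tapped link could be resolved back to its edit. The `Edit` class and the `editForLink` function are hypothetical names used for illustration only; they are not taken from the app's source code.

```dart
/// Hypothetical, simplified stand-in for the app's edit model.
class Edit {
  final String oldText;
  final String newText;
  const Edit(this.oldText, this.newText);
}

/// Resolves the value of a Quill `link` attribute (for example "2")
/// to the edit stored at that index. Returns null when the value is
/// not a valid index, so the caller can simply ignore the tap.
Edit? editForLink(String link, List<Edit> edits) {
  final index = int.tryParse(link);
  if (index == null || index < 0 || index >= edits.length) {
    return null;
  }
  return edits[index];
}
```

With this kind of lookup, the tooltip only needs the link value of the clicked word to find the old value, the new value, and the reasons to display.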

The flutter_quill package brings the Quill editor to Flutter. For other technologies, there are certainly equivalent solutions; it's up to you to discover them 😊.

Next, we'll look at how to ask Gemini for values that we can transform into this format.

Implementation

We will make Gemini respond in JSON so that a single request returns all the data we need.

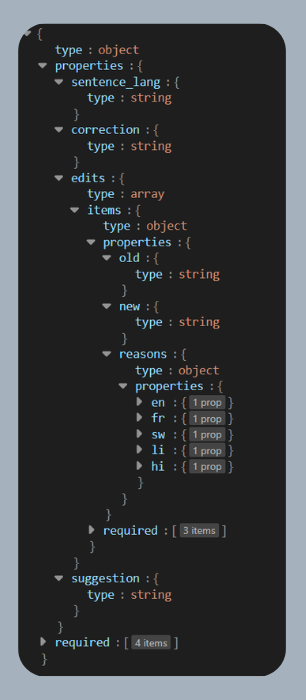

Data structure

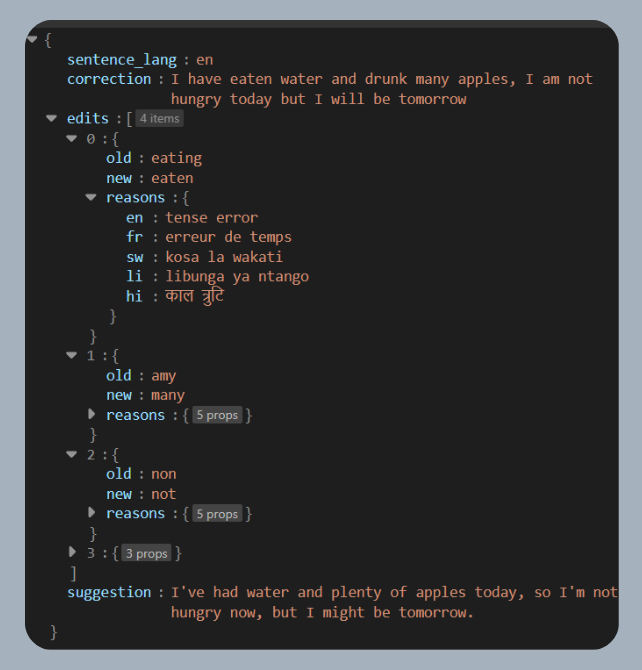

Here's an example of how we want Gemini to respond:

As you can see, we have all the necessary data: correction will contain the corrected text, edits will group the modified words, their old values, and the reasons for the changes in the different languages, and finally, suggestion will contain the text suggested by Gemini.
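The structure described above can be sketched as Dart model classes. This is a minimal sketch, not the app's actual code: the class names AssistantResultModel and EditModel and the JSON keys (correction, edits, old, new, reasons, suggestion) come from this article, while the constructors, the nullable suggestion, and the `parseAssistantResult` helper are assumptions made for illustration.

```dart
import 'dart:convert';

/// One changed word or group of words.
class EditModel {
  final String oldText;
  final String newText;
  final Map<String, String> reasons; // language code -> explanation

  EditModel({
    required this.oldText,
    required this.newText,
    required this.reasons,
  });

  factory EditModel.fromJson(Map<String, dynamic> json) => EditModel(
        oldText: json['old'] as String,
        newText: json['new'] as String,
        reasons: Map<String, String>.from(json['reasons'] as Map),
      );
}

/// The full result returned by Gemini for one request.
class AssistantResultModel {
  final String correction;
  final List<EditModel> edits;
  final String? suggestion; // assumed optional: shown only "if available"

  AssistantResultModel({
    required this.correction,
    required this.edits,
    this.suggestion,
  });

  factory AssistantResultModel.fromJson(Map<String, dynamic> json) =>
      AssistantResultModel(
        correction: json['correction'] as String,
        edits: (json['edits'] as List)
            .map((e) => EditModel.fromJson(e as Map<String, dynamic>))
            .toList(),
        suggestion: json['suggestion'] as String?,
      );
}

/// Hypothetical helper: decodes the raw JSON text into the model.
AssistantResultModel parseAssistantResult(String rawJson) =>
    AssistantResultModel.fromJson(jsonDecode(rawJson) as Map<String, dynamic>);
```

Keeping the parsing in factory constructors means the controller can hand a ready-made model to the UI instead of passing raw maps around.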

Thanks to all these details, we can convert the text to the Quill format ourselves and load it into our editor. Here's an overview of the conversion in Dart (don't worry if you don't understand it; that's not the main purpose of this article).

    import 'package:flutter/foundation.dart'; // provides debugPrint

    /// Converts the corrected [text] into Quill Delta operations in which every
    /// edited word is a link whose value is the index of its edit in [elements].
    List<Map<String, dynamic>> textToQuillDelta(String text, String oldText, List<EditModel> elements) {
      List<Map<String, dynamic>> delta = [];
      int index = 0; // position in text up to which the delta has been built

      for (EditModel element in elements) {
        try {
          Iterable<Match> matches = element.newText.allMatches(text);

          // In case of multiple matches, the correct one is the one whose
          // position is closest to the position of the old text.
          // Matches farther away than this initial bound are ignored.
          int minDifference = 1000 + element.newText.length;
          int startIndex = -1;
          int oldTextIndex = oldText.indexOf(element.oldText);

          for (Match match in matches) {
            int difference = (match.start - oldTextIndex).abs();
            if (difference < minDifference) {
              minDifference = difference;
              startIndex = match.start;
            }
          }

          if (startIndex != -1) {
            // plain text between the previous edit and this one
            String beforeText = text.substring(index, startIndex);
            if (beforeText.isNotEmpty) {
              delta.add({"insert": beforeText});
            }

            // the edited word, stored as a link holding the edit's index
            delta.add({"insert": element.newText, "attributes": {"link": elements.indexOf(element).toString()}});
            index = startIndex + element.newText.length;
          }
        }
        catch (err) {
          debugPrint('===> Failed to find word: ${element.newText} - $err');
        }
      }

      String remainingText = text.substring(index);
      if (remainingText.isNotEmpty) {
        delta.add({"insert": remainingText});
      }

      // add a newline at the end as it is required
      delta.add({"insert": "\n"});

      return delta;
    }

Although the method may seem long 🙈, it is designed to prevent conflicts, especially when the same word appears several times and only one occurrence requires modification. In such cases, we refer to the position of the old value in the user's original text to ensure that the appropriate word is replaced.
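The disambiguation step can be isolated in a few lines. The sketch below is a simplified variant written for illustration, not the app's code: `closestOccurrence` is a hypothetical name, and unlike the method above it has no upper bound on the distance.

```dart
/// Returns the start index of the occurrence of [word] in [text] that is
/// closest to [expectedIndex], or -1 when [word] does not occur at all.
/// When two occurrences are equally close, the first one wins.
int closestOccurrence(String text, String word, int expectedIndex) {
  int best = -1;
  int? bestDistance;
  for (final match in word.allMatches(text)) {
    final distance = (match.start - expectedIndex).abs();
    if (bestDistance == null || distance < bestDistance) {
      bestDistance = distance;
      best = match.start;
    }
  }
  return best;
}
```

For example, if the user wrote "the cat sat on teh mat", the old value "teh" starts at index 15. In the corrected text "the cat sat on the mat", "the" appears at indexes 0 and 15, and `closestOccurrence(corrected, 'the', 15)` returns 15, so only the second "the" becomes a link.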

During my initial testing phase, I utilized Gemini for automated Quill conversion, but the results proved inaccurate despite several prompt modifications. Consequently, I opted for manual conversion to ensure greater precision. Nevertheless, Gemini remains an invaluable tool for my requirements 😊.

While artificial intelligence offers immense power, it's not meant to solve every problem.

Gemini 1.0

Usually, Gemini responds with free text, as in a chat. Since we need it to respond in JSON format, I had to use the Structured Prompt method (see my article on Gemini for more details).

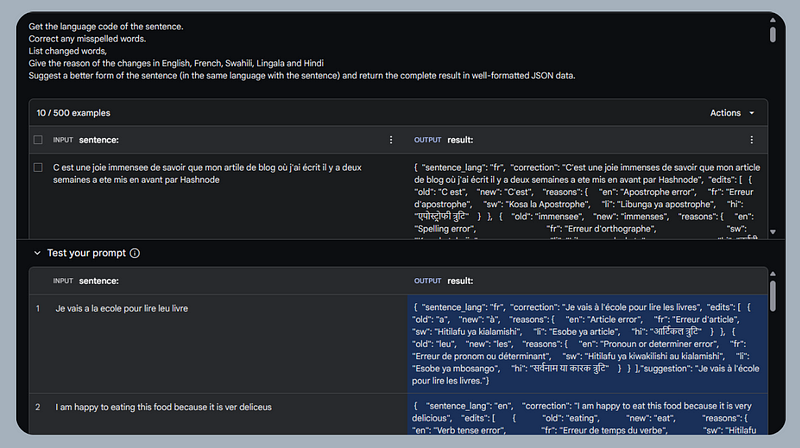

Google AI Studio

It can be quite complex to set up a structured prompt, which is why it's important to start by creating a prototype with Google AI Studio.

We provide instructions and sample inputs/outputs, then let Gemini send the answers back in the desired format.

I had to run a lot of tests to get satisfactory results. You can import the data from this file into your Google AI Studio console to see what it looks like.

Application

To move from prototype to a real application, use the SDK that matches your chosen technology. As stated earlier, this article does not focus on the technical details.

Here's a glimpse of the implementation in Flutter to illustrate the concept:

    // Assumes: import 'package:google_generative_ai/google_generative_ai.dart';
    // `model` is an already configured GenerativeModel and `input` is the
    // user's text. This snippet runs inside an async function.

    // Instructions followed by one sample input/output pair.
    final List<Part> structuredParts = [
      TextPart("Get the language code of the sentence. \nCorrect any misspelled words. \nList changed words, \nGive the reason of the changes in English, French, Swahili, Lingala and Hindi\nConvert the correction in quill delta with all edited words as links with their index as value.\nCheck that the number of the links in corrextion_quill are equal to number of edits, if not, fix that\nSuggest a better form of the sentence (in the same language with the sentence) and return the complete result in well-formatted JSON data."),
      TextPart("sentence: C est une joie immensee de savoir que mon artile de blog où j'ai écrit il y a deux semaines a ete mis en avant par Hashnode"),
      TextPart('''result: { "sentence_lang": "fr", "correction": "C'est une joie immenses de savoir que mon article de blog où j'ai écrit il y a deux semaines a ete mis en avant par Hashnode", "edits": [ { "old": "C est", "new": "C'est", "reasons": { "en": "Apostrophe error", "fr": "Erreur d'apostrophe", "sw": "Kosa la Apostrophe", "li": "Libunga ya apostrophe", "hi": "एपोस्ट्रोफी त्रुटि" } }, { "old": "immensee", "new": "immenses", "reasons": { "en": "Spelling error", "fr": "Erreur d'orthographe", "sw": "Kosa la tahajia", "li": "Libunga ya ko kɔta", "hi": "वर्तनी संबंधी त्रुटि" } }, { "old": "artile", "new": "article", "reasons": { "en": "Spelling error", "fr": "Erreur d'orthographe", "sw": "Kosa la tahajia", "li": "Libunga ya ko kɔta", "hi": "वर्तनी संबंधी त्रुटि" } } ],"suggestion": "Je suis ravi(e) de voir mon article de blog, rédigé il y a deux semaines, mis en avant par Hashnode."}'''),
    ];

    // The user's sentence is appended after the sample, and the trailing
    // "result: " part invites Gemini to complete it in the same format.
    final response = await model.generateContent(
      [
        Content.multi(
          [
            ...structuredParts,
            TextPart("sentence: $input"),
            TextPart("result: "),
          ]
        )
      ],
    );
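Because this approach relies on a text reply, the reply is not guaranteed to be clean JSON. Here is a defensive sketch, assuming the reply may surround the object with extra text (an assumption for illustration, not a documented behaviour); `extractJsonObject` is a hypothetical helper and is not part of the SDK or of the app.

```dart
import 'dart:convert';

/// Returns the JSON object found in [raw], or null when none can be
/// decoded. It keeps everything between the first '{' and the last '}'
/// and tries to decode that slice.
Map<String, dynamic>? extractJsonObject(String raw) {
  final start = raw.indexOf('{');
  final end = raw.lastIndexOf('}');
  if (start == -1 || end <= start) return null;
  try {
    final decoded = jsonDecode(raw.substring(start, end + 1));
    return decoded is Map<String, dynamic> ? decoded : null;
  } on FormatException {
    // The slice was not valid JSON.
    return null;
  }
}
```

Returning null instead of throwing lets the controller show a friendly error when the reply cannot be used.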

Gemini 1.5

While developing this app, I discovered that Gemini 1.5 was in public preview. Its new features, such as specifying the output format, motivated me to explore further. At the time of writing, this API feature is not available through the SDKs, so it requires direct HTTP requests. Refer to this article for a detailed explanation. Here is the query I used.

    curl --location 'https://generativelanguage.googleapis.com/v1beta/models/gemini-1.5-pro-latest:generateContent?key=API_KEY' \
      --header 'Content-Type: application/json' \
      --data '{
        "contents": [
          {
            "parts": [
              {"text": "Get the language code of the \"YOUR INPUT\". \nCorrect any misspelled words. \nList changed words, \nGive a very short reason of the changes in English, French, Swahili, Lingala and Hindi\nSuggest a better sentence which should have the same complete sense that the original (in the same language with the sentence) \n\nreturn the complete result using this JSON schema:\n{sentence_lang: string\ncorrection: string\nedits: array [\n{\nold: string\nnew: string\nreasons: {\nen: string\nfr: string\nsw: string\nli: string\nhi: string\n}\n}\n]\nsuggestion: string}"}
            ]
          }
        ],
        "generationConfig": {
          "response_mime_type": "application/json"
        }
      }'

Setting response_mime_type to application/json tells the API to return the response as JSON. When the field is omitted, the response is returned as plain text (text/plain).
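In a Flutter project, the same request can be sent from Dart. The sketch below uses only the Dart standard library (dart:convert and dart:io, so it does not run on Flutter web) and mirrors the cURL call above. The function names `buildRequestBody` and `generateJson` are hypothetical, and this is not the content of the app's rest_api.dart.

```dart
import 'dart:convert';
import 'dart:io';

/// Builds the JSON body of a generateContent call that asks for a JSON
/// reply through `response_mime_type`, as in the cURL request.
Map<String, dynamic> buildRequestBody(String prompt) => {
      'contents': [
        {
          'parts': [
            {'text': prompt},
          ],
        },
      ],
      'generationConfig': {'response_mime_type': 'application/json'},
    };

/// Sends [prompt] to the endpoint used in the cURL example and returns
/// the raw response body. [apiKey] is supplied by the caller.
Future<String> generateJson(String prompt, String apiKey) async {
  final uri = Uri.https(
    'generativelanguage.googleapis.com',
    '/v1beta/models/gemini-1.5-pro-latest:generateContent',
    {'key': apiKey},
  );
  final client = HttpClient();
  try {
    final request = await client.postUrl(uri);
    request.headers.contentType = ContentType.json;
    request.write(jsonEncode(buildRequestBody(prompt)));
    final response = await request.close();
    return await response.transform(utf8.decoder).join();
  } finally {
    client.close();
  }
}
```

Letting jsonEncode build the body avoids escaping the prompt by hand, which is easy to get wrong when the prompt contains quotes and line breaks.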

In the instructions, we didn't need to give examples. We provided the JSON schema and it does the job as it should. 🦾

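Here's a clearer illustration of the schema provided:

    {
      sentence_lang: string
      correction: string
      edits: array [
        {
          old: string
          new: string
          reasons: {
            en: string
            fr: string
            sw: string
            li: string
            hi: string
          }
        }
      ]
      suggestion: string
    }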

Before you get to code, you also have the option to run your tests with Gemini 1.5 via Google AI Studio.

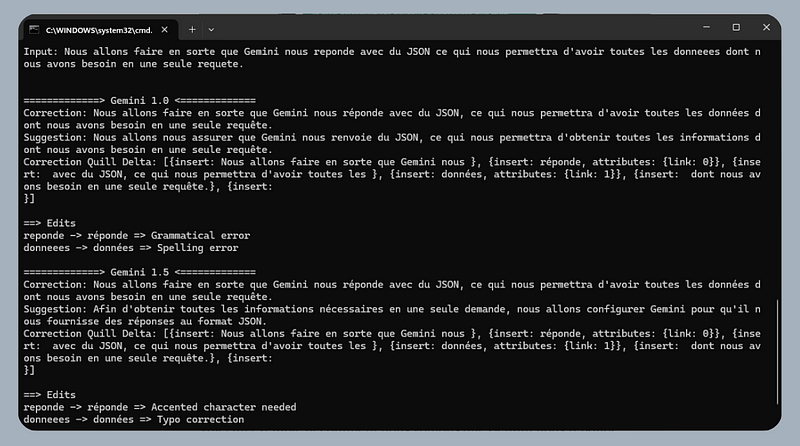

In this screenshot you can compare the results returned by Gemini 1.0 and Gemini 1.5.

If you are a Flutter developer, you can clone the GitHub repository, go to the project's root in a terminal, and run the following command:

flutter test --name="Test check method" test/assistant_test.dart --dart-define=API_KEY=YOUR_API_KEY --dart-define=SENTENCE="YOUR_TEXT"

Here is the final demo of the application:

Source Code

You can find the full source code on GitHub. If you liked my work, feel free to leave a star to encourage me to share more articles.

🚀Stay Connected!

Thanks for reading this article! If you enjoyed the content, feel free to follow me on social media to keep up with the latest news, tips, and posts about Flutter and development.

👥 Follow me on:

Twitter: lyabs243

LinkedIn: Loïc Yabili

Join our growing and engaging community for exciting discussions on Flutter development! 🚀✨

Thanks for your ongoing support! 🙌✨